Transforming Pharma Supply Chains with Agentic AI: How a Global Distributor Gained Real-Time Insight Across Complex Systems

In the pharmaceutical distribution industry, timely access to accurate product and logistics information is not just a competitive advantage; it is a necessity. Companies operating at scale must manage complex supply chains, stringent regulatory requirements, and increasing demands from both customers and partners for transparency and responsiveness. Yet many organizations still rely on fragmented systems and manual processes to piece together critical data, leading to inefficiencies, delayed responses, and missed opportunities.

This blog presents a real-world implementation of an agentic AI solution developed for one of the world’s largest providers of medicines, pharmaceutical supplies, and healthcare IT services. Faced with the challenge of navigating vast, distributed data sources, from Warehouse Management Systems (WMS) and Enterprise Resource Planning (ERP) systems to Electronic Product Code Information Services (EPCIS), our client sought to streamline how supply chain information could be queried and consumed across the organization.

We designed and delivered an intelligent, LLM-powered chatbot that enables users to ask complex questions in natural language and receive fast, accurate, and contextual answers. Built on a robust architecture combining LlamaIndex agents, workflows, and retrieval-augmented generation (RAG), the solution empowers both technical and non-technical users with unprecedented access to critical supply chain data, enhancing efficiency, transparency, and decision-making capabilities.

With our AI chatbot, built on LlamaIndex and MongoDB, managers, customer service reps, and logistics coordinators can easily query complicated supply chain data. They simply ask a question like “What’s the status of purchase order #12345 for Customer XYZ, including the serial numbers shipped yesterday?” and get an accurate response in seconds.

The following sections outline the client’s challenges, the solution design and architecture, and the tangible outcomes this AI system has delivered.

Project Background

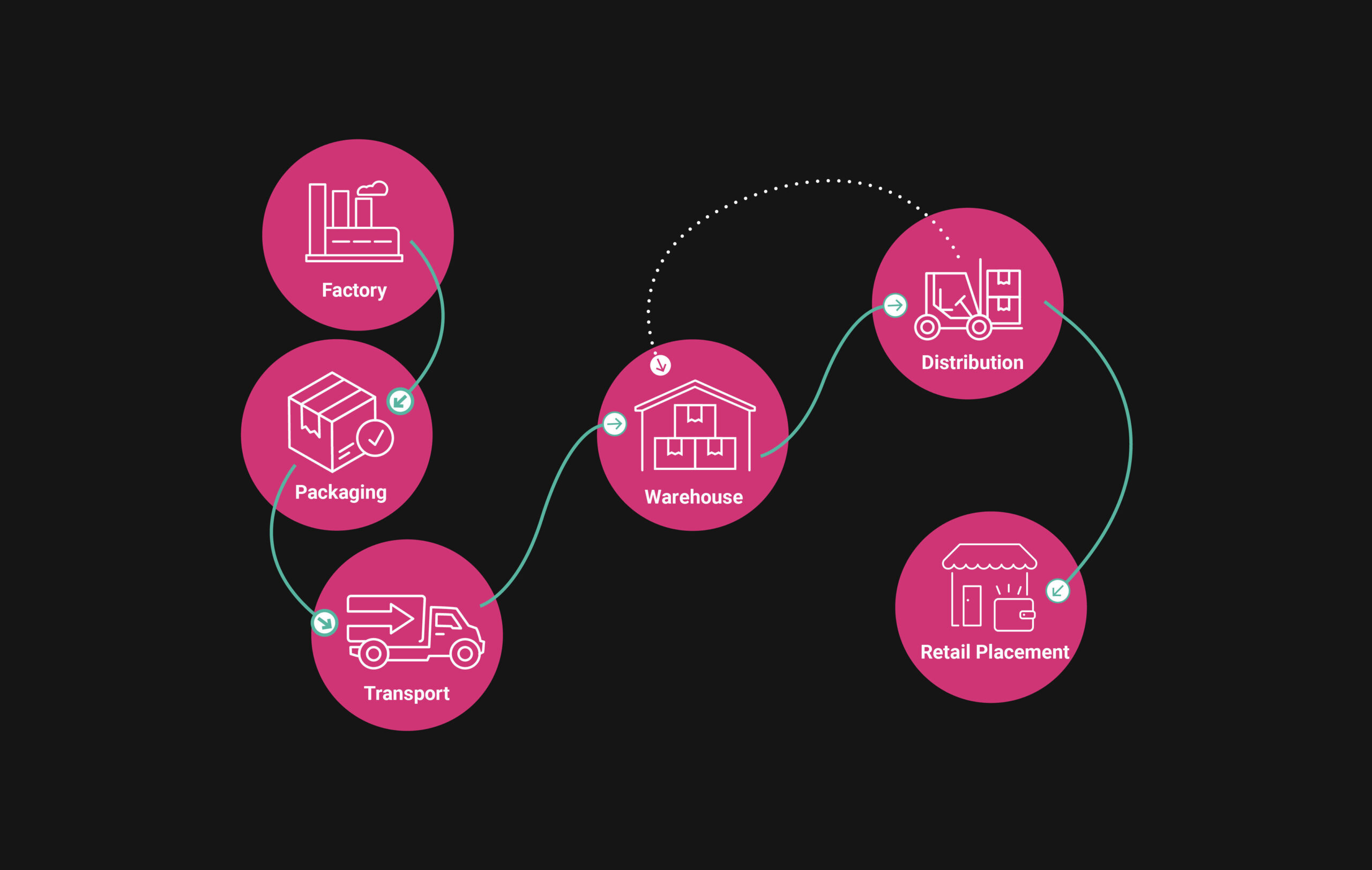

One of the largest providers of medicines, pharmaceutical supplies, and healthcare IT solutions was facing significant challenges in tracking and accessing real-time distribution data across its supply chain. Their operations span multiple stages, from manufacturing and wholesaling to pharmacy-level dispensing. They involve complex processes such as commissioning, aggregation, shipping, disaggregation, and decommissioning, as shown in the distribution workflow.

The company relies on a wide array of systems, including Warehouse Management Systems (WMS), Enterprise Resource Planning (ERP), and Electronic Product Code Information Services (EPCIS), each generating large volumes of data. However, accessing insights such as product provenance, current location, and distribution flow required manually extracting and reconciling data from disparate systems. This manual effort resulted in delayed responses, reduced operational efficiency, and limited supply chain visibility.

To address these challenges, we delivered an agentic AI solution to intelligently orchestrate data retrieval, correlation, and interpretation, streamlining access to critical distribution information across their supply chain.

Key Challenges

- Data complexity and integration: The data model represented a complex, nested structure combining event timelines, product specifics, and customer details. Effectively querying across these interconnected data points was non-trivial. For instance, queries like “Tell me what you know about lot number X” or “Where was this serial number last shipped from?” required precise extraction across multiple document layers and relations, something that traditional querying methods could not easily handle.

- Diverse query needs: The system had to answer questions that were complex and often difficult to interpret. Answering them required additional guidance on how to retrieve the necessary data and how to structure the response.

- Natural language querying for non-technical users: The primary goal was to enable non-technical users to interact with the data using natural language, eliminating the need for specialized query languages.

- Real-time access and performance: Answers needed to be delivered quickly to support real-time operational decisions and customer service interactions. Questions such as “What percentage of data did customer X receive today?” or “Where was this serial number last shipped from?” required rapid processing of current data with minimal latency to keep the system responsive for operational and customer service teams.

- Scalability: The solution needed to handle a growing volume of data and query types without performance degradation.

- Context size: Some questions need access to large amounts of data that might exceed context size limits. The challenge is to extract and show only the data that is relevant to each specific question.

Proposed Solution Based on LlamaIndex

We architected an AI-powered chatbot solution with LlamaIndex as the central framework to tackle these challenges. LlamaIndex provided the essential toolkit for integrating Large Language Models (LLMs) with the enterprise’s private supply chain data stored in MongoDB, enabling sophisticated Retrieval-Augmented Generation (RAG) and agentic capabilities.

LlamaIndex is a data framework for building LLM applications. It excels at connecting custom data sources to LLMs, offering components for data ingestion, indexing, querying, and agentic reasoning.

Key LlamaIndex concepts and components leveraged in this solution include:

- Workflow: The solution is built upon the workflow concept embedded in LlamaIndex. A workflow is an event-driven, step-based approach to controlling the execution flow of an agentic application. The application is divided into sections called Steps, which are triggered by Events and emit Events that trigger further Steps. By combining Steps and Events, we could create arbitrarily complex flows that encapsulated logic, making our application more maintainable and easier to understand.

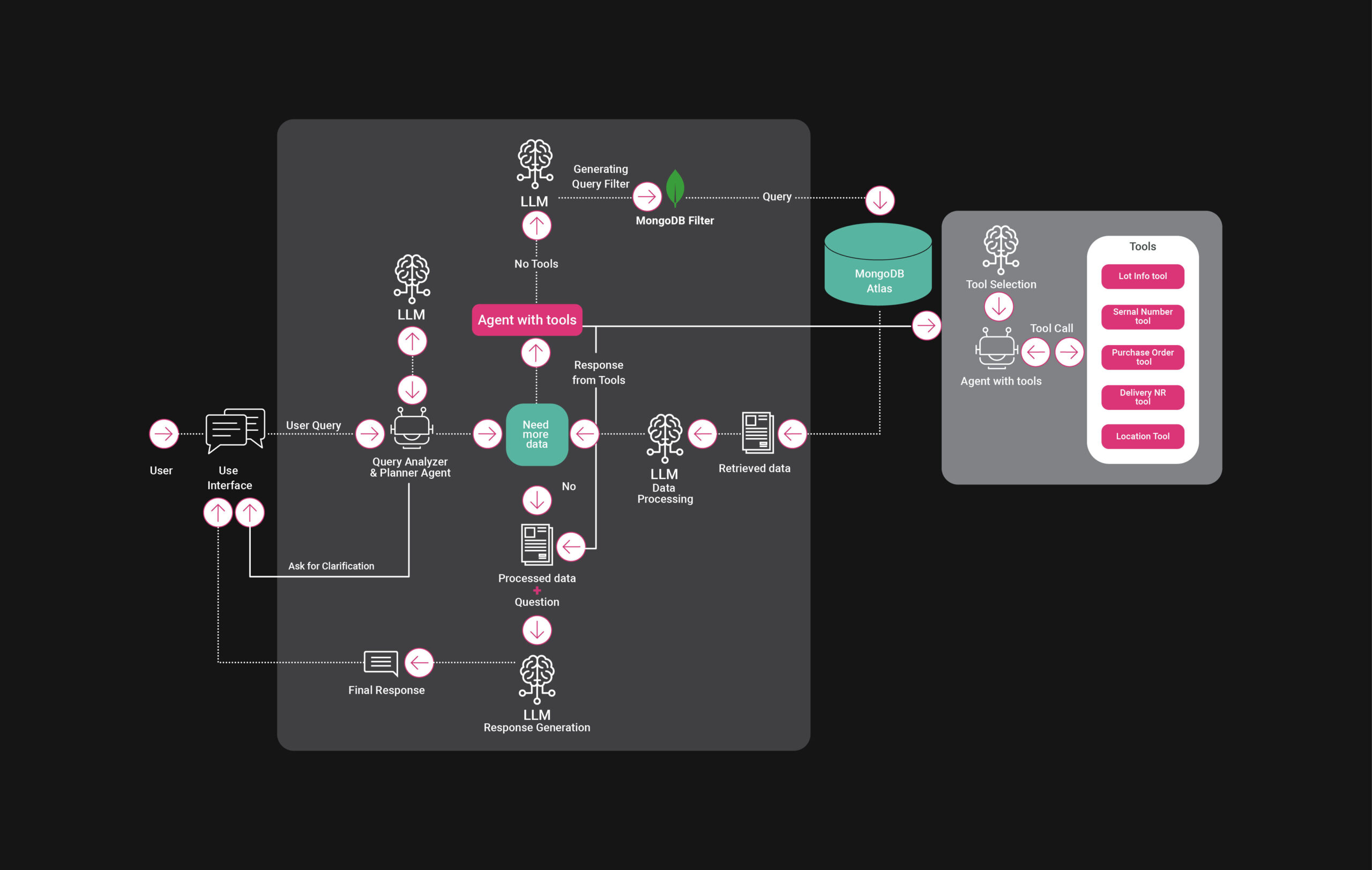

- Agent framework: LlamaIndex provides robust abstractions for building agents capable of complex reasoning and tool usage. We specifically implemented a ReActAgent (Reasoning and Acting) configured for supply chain tasks.

- Tools: The framework allows seamless integration of custom “tools”—specialized functions the agent can call to perform specific actions. We developed several custom tools for targeted data retrieval from MongoDB.

- LLM abstractions: LlamaIndex provides consistent interfaces for interacting with various language models.

- Structured output: Capabilities to parse LLM responses into structured formats (like Pydantic models) were crucial for producing consistent, machine-readable responses.
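
To make the Step and Event idea from the workflow bullet concrete, here is a minimal, framework-free sketch in plain Python. It is not the LlamaIndex Workflow API: the names `Event`, `step`, `run_workflow`, and the two example steps are hypothetical and only illustrate how steps can be triggered by events and emit new events.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Type


@dataclass
class Event:
    """Base class for everything that flows between steps."""
    payload: str


class QuestionReceived(Event):
    pass


class QuestionRefined(Event):
    pass


class Done(Event):
    pass


# Registry mapping an event type to the step that handles it.
STEPS: Dict[Type[Event], Callable[[Event], Event]] = {}


def step(event_type: Type[Event]):
    """Register a function as the step triggered by `event_type`."""
    def decorator(func):
        STEPS[event_type] = func
        return func
    return decorator


@step(QuestionReceived)
def refine(ev: Event) -> Event:
    # Placeholder for an LLM call that rewrites the question.
    return QuestionRefined(payload=ev.payload.strip().rstrip("?") + "?")


@step(QuestionRefined)
def answer(ev: Event) -> Event:
    # Placeholder for the agent or RAG path.
    return Done(payload=f"Answer to: {ev.payload}")


def run_workflow(start: Event, max_steps: int = 10) -> str:
    """Dispatch events to steps until a Done event is emitted."""
    event = start
    for _ in range(max_steps):
        if isinstance(event, Done):
            return event.payload
        event = STEPS[type(event)](event)
    raise RuntimeError("workflow did not finish")
```

Calling `run_workflow(QuestionReceived("where is lot 42"))` walks through both steps in order. Adding a new branch only requires a new event type and a step registered for it, which is the maintainability benefit described above.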

LlamaIndex was chosen for several compelling reasons:

- Sophisticated agent capabilities: The framework’s support for agent types like ReAct allowed us to build an intelligent agent that could reason about user queries and decide which specialized tool to use.

- Flexible tool integration: Defining and integrating custom tools tailored to specific MongoDB query patterns for supply chain data was straightforward.

- LLM agnosticism and integration: We could integrate easily with our chosen LLM provider while keeping the option to switch providers or use multiple models.

- Structured data handling: Features facilitating structured output from LLMs were vital for translating natural language into precise database queries and extracting specific attributes.

- Developer experience: The Python-native framework and clear abstractions accelerated development and allowed for building a robust, maintainable solution.
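
As an illustration of the custom tools mentioned above, the sketch below shows what a single retrieval tool might look like. It assumes a simplified, hypothetical event schema (`serial`, `type`, `site`, `time`) and uses an in-memory list in place of a MongoDB collection so that it runs on its own. The sample records and the `TOOLS` registry are invented for the example and do not reflect the client's data or the LlamaIndex tool classes.

```python
from typing import Any, Dict, List, Optional

# In-memory stand-in for a MongoDB collection of events (hypothetical schema and data).
EVENTS: List[Dict[str, Any]] = [
    {"serial": "SN-1", "type": "commissioning", "site": "Plant A", "time": "2024-05-01T08:00:00Z"},
    {"serial": "SN-1", "type": "shipping", "site": "Warehouse B", "time": "2024-05-03T10:00:00Z"},
    {"serial": "SN-1", "type": "shipping", "site": "Warehouse C", "time": "2024-05-06T09:30:00Z"},
]


def last_shipped_from(serial: str, events: List[Dict[str, Any]] = EVENTS) -> Optional[str]:
    """Return the site of the most recent shipping event for a serial number.

    The first docstring line doubles as the description an agent would read.
    """
    shipments = [e for e in events if e["serial"] == serial and e["type"] == "shipping"]
    if not shipments:
        return None
    # ISO 8601 UTC timestamps in the same format sort correctly as strings.
    return max(shipments, key=lambda e: e["time"])["site"]


# A tool is a callable plus metadata the agent can use to decide when to call it.
TOOLS = {
    "last_shipped_from": {
        "fn": last_shipped_from,
        "description": last_shipped_from.__doc__.strip().splitlines()[0],
    }
}
```

Keeping each tool narrow, with a clear name and description, gives the agent an unambiguous choice when it reasons about a question such as “Where was this serial number last shipped from?”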

Solution Overview

The resulting solution is an intelligent AI chatbot. It empowers users to ask complex questions about supply chain operations in natural language and receive accurate, context-aware answers.

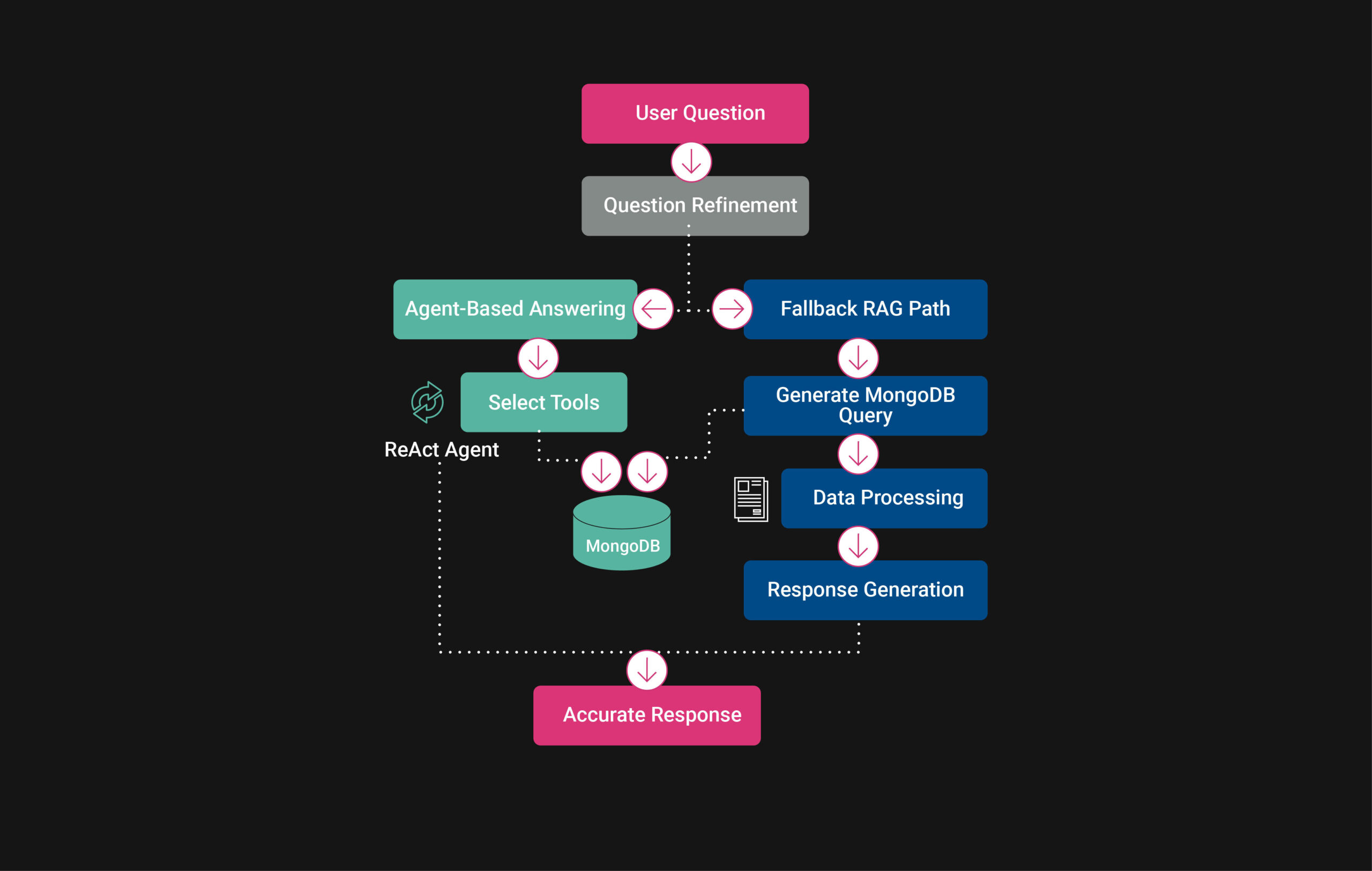

The system comprises two main operational paths orchestrated by a central workflow:

- Agent-Based Answering: An initial attempt is made using a LlamaIndex ReActAgent equipped with specialized tools. The agent analyzes the user’s refined question and selects the appropriate tool(s) to retrieve specific data from MongoDB, synthesizing an answer.

- Fallback RAG Path: If the agent determines it cannot answer or its initial response is deemed unhelpful, the system falls back to a more comprehensive RAG pipeline. This involves:

  - Translating the natural language question into a precise MongoDB query using an LLM aware of the database schema.

  - Executing the query against the MongoDB collection.

  - Processing, flattening, and extracting relevant attributes from the retrieved documents using LLMs.

  - Synthesizing a final answer based on the processed data and the original question, again using an LLM.

This dual approach ensures both efficiency for common queries handled by tools and robustness for complex or novel questions requiring deeper data retrieval and synthesis.
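
The control flow of this dual approach can be sketched in a few lines of plain Python. This is an assumption-laden illustration rather than the production logic: `agent_path` and `rag_path` are hypothetical callables standing in for the agent and the RAG pipeline, and the keyword-based `is_helpful` check is a simple placeholder for whatever criterion decides that an answer is unhelpful.

```python
from typing import Callable, Optional, Tuple

# Phrases suggesting the agent could not produce a useful answer (illustrative only).
UNHELPFUL_MARKERS = ("i don't know", "cannot answer", "no tool")


def is_helpful(answer: Optional[str]) -> bool:
    """Heuristic check; a real system might ask an LLM to judge the answer."""
    if not answer or not answer.strip():
        return False
    lowered = answer.lower()
    return not any(marker in lowered for marker in UNHELPFUL_MARKERS)


def answer_question(
    question: str,
    agent_path: Callable[[str], Optional[str]],
    rag_path: Callable[[str], str],
) -> Tuple[str, str]:
    """Try the agent first and fall back to the RAG pipeline if needed.

    Returns a pair of (answer, path_used).
    """
    try:
        candidate = agent_path(question)
    except Exception:
        candidate = None  # Treat agent or tool failures as "no answer".
    if is_helpful(candidate):
        return candidate, "agent"
    return rag_path(question), "rag"
```

Returning the path alongside the answer makes it easy to log how often the fallback is used, which helps decide when a recurring question deserves its own tool.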

Integration with Third-Party Systems via Agent Tools

While the core chatbot operated on data stored in MongoDB, much of that data originated from third-party systems such as EPCIS and ERP platforms. Data pipelines ingested and transformed data from these external systems into MongoDB, ensuring the chatbot had access to the latest operational insights. However, the architecture is not limited to static ingestion: LlamaIndex’s agent framework also allows real-time access to external systems through custom tools. By leveraging the Model Context Protocol (MCP), it is possible to write agent tools that query third-party APIs or services directly at runtime, enabling dynamic data access when needed. This opens the door to future extensions in which the agent retrieves live information from systems not covered by the ingestion pipelines, making the solution even more responsive and adaptable.

Support for On-Premises and Private Deployments

The architecture was intentionally designed to accommodate a variety of deployment models, including highly secure, on-premises environments. For clients requiring full data privacy and regulatory compliance, the solution can be deployed with a private LLM instance (e.g., via Azure OpenAI, Amazon Bedrock, or locally hosted open-source models) and a private vector store hosted within the client’s infrastructure. LlamaIndex supports pluggable backends, so components like vector search and document storage can be configured to run on-premises using MongoDB or other self-hosted databases. The entire workflow, including data ingestion, tool execution, and RAG pipelines, can be containerized and orchestrated within a private cloud or on-premises Kubernetes cluster, ensuring no data leaves the client’s controlled environment while maintaining full agentic and conversational capabilities.

Solution Architecture

The architecture is based on the LlamaIndex Workflow concept, which in our case consists of the following steps:

Question Refinement: Each incoming user query is first analyzed by an LLM using LlamaIndex abstractions. The model interprets the current question in the context of the ongoing conversation, resolving ambiguities and incorporating relevant details from previous interactions. The result is a more precise and context-aware version of the original question. This refined question significantly improves the performance of both the agent and the fallback RAG path.
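
A minimal sketch of how such a refinement prompt could be assembled is shown below. The template wording, the `build_refinement_prompt` helper, and the `max_turns` limit are assumptions made for illustration; the resulting string would be sent to whichever LLM the deployment uses.

```python
from typing import List, Tuple

# Hypothetical prompt template; the real wording would be tuned for the domain.
REFINE_TEMPLATE = (
    "Rewrite the user's latest question so it can be understood without the "
    "conversation history. Resolve pronouns and implicit references.\n\n"
    "Conversation so far:\n{history}\n\n"
    "Latest question: {question}\n"
    "Standalone question:"
)


def build_refinement_prompt(
    history: List[Tuple[str, str]], question: str, max_turns: int = 6
) -> str:
    """Format the most recent turns and the new question into one prompt."""
    recent = history[-max_turns:]  # Keep only the latest turns to bound prompt size.
    lines = [f"{role}: {text}" for role, text in recent]
    return REFINE_TEMPLATE.format(
        history="\n".join(lines) or "(none)", question=question
    )
```

With a history containing “Status of PO 12345?” and a follow-up such as “Which serials were in it?”, the LLM has what it needs to rewrite the follow-up as a standalone question about purchase order 12345.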

Agent with Tools: The LlamaIndex ReActAgent attempts to answer user queries using its specialized tools. It reasons about the query, selects the best tool(s), executes them, and formulates a response.

Natural Language to MongoDB Query: An LLM combines its understanding of the natural language question with the MongoDB schema to generate a precise database query.
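
Because the query in this step is generated by an LLM, it is prudent to validate it before execution. The sketch below assumes the LLM returns a MongoDB filter as JSON text and checks it against an allow-list. The field names in `ALLOWED_FIELDS` and the chosen operators are hypothetical, and this guard is an illustration rather than a description of the delivered system.

```python
import json
from typing import Any, Dict, Set

# Hypothetical allow-lists; real values would come from the collection schema.
ALLOWED_FIELDS: Set[str] = {"lot_number", "serial_number", "customer", "event_type"}
ALLOWED_OPERATORS: Set[str] = {"$and", "$or", "$in", "$eq", "$gte", "$lte"}


def _check(node: Any) -> None:
    """Recursively reject unknown field names and operators."""
    if isinstance(node, dict):
        for key, value in node.items():
            if key.startswith("$"):
                if key not in ALLOWED_OPERATORS:
                    raise ValueError(f"operator not allowed: {key}")
            elif key not in ALLOWED_FIELDS:
                raise ValueError(f"unknown field: {key}")
            _check(value)
    elif isinstance(node, list):
        for item in node:
            _check(item)


def parse_filter(llm_output: str) -> Dict[str, Any]:
    """Parse the LLM's JSON text into a filter dict and validate it."""
    query = json.loads(llm_output)
    if not isinstance(query, dict):
        raise ValueError("filter must be a JSON object")
    _check(query)
    return query
```

The validated dictionary can then be passed as the filter argument to a PyMongo `find` call. Rejecting operators such as `$where` keeps the generated query within a safe, predictable subset.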

Retrieval: The generated MongoDB query is executed and the required data is retrieved.

Data Processing: Documents are flattened, and key attributes are extracted using LLMs. If the context is too large, the system detects this and prompts the user to refine their question to narrow the scope.
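
The flattening and size check can be illustrated with a short, self-contained sketch. The dotted-key convention, the `fits_context` helper, and the character budget used as a rough stand-in for a token limit are all assumptions made for the example.

```python
from typing import Any, Dict, List


def flatten(doc: Any, prefix: str = "") -> Dict[str, Any]:
    """Turn nested dicts and lists into a single-level dict with dotted keys."""
    flat: Dict[str, Any] = {}
    if isinstance(doc, dict):
        for key, value in doc.items():
            flat.update(flatten(value, f"{prefix}{key}."))
    elif isinstance(doc, list):
        for index, value in enumerate(doc):
            flat.update(flatten(value, f"{prefix}{index}."))
    else:
        flat[prefix[:-1]] = doc  # Drop the trailing dot from the accumulated path.
    return flat


def fits_context(docs: List[Dict[str, Any]], max_chars: int = 12000) -> bool:
    """Rough size check that counts characters instead of tokens."""
    total = sum(len(f"{k}={v}") for d in docs for k, v in flatten(d).items())
    return total <= max_chars
```

For example, `flatten({"lot": "X", "events": [{"type": "shipping"}]})` yields the keys `lot` and `events.0.type`. When `fits_context` returns `False`, the workflow can ask the user to narrow the question instead of sending an oversized prompt to the LLM.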

Response Generator: The refined question and extracted context are passed to the LLM, which generates the final response.

Achievements and Outcomes

Increased operational transparency: The solution allowed stakeholders to track the movement and status of pharmaceutical products, enhancing visibility throughout complex supply chain operations and increasing confidence in inventory accuracy and compliance.

Improved customer service performance: Customer service teams can now respond faster and more accurately to inquiries about orders, deliveries, and product information, leading to improved SLAs and customer satisfaction scores.

Reduced manual workload for teams: By automating the data correlation process across multiple systems, the solution significantly decreased the time analysts and support teams spent searching for information, allowing them to focus on higher-value tasks.

Accelerated decision-making: Real-time access to actionable insights allowed operational managers to make quicker, data-driven decisions related to inventory movement, product recalls, or delivery tracking.

Enhanced user experience: The solution replaced complex data retrieval processes with an intuitive, conversational chat interface.

Scalable and future-proof architecture: Built on LlamaIndex, Llama Agents, and workflows, the architecture is scalable and adaptable, so it can remain robust as requirements evolve.

Foundation for future enhancements: The robust RAG and agentic framework provides a solid base for adding new capabilities, integrating more data sources, or developing proactive alerting features.

Conclusion

This AI chatbot changes how staff work with supply chain data. By integrating LlamaIndex’s agentic and Retrieval-Augmented Generation (RAG) capabilities with MongoDB’s data management, we built a tool that processes natural language questions, refines them, uses specialized tools for data retrieval, and synthesizes responses from different data sources, removing the data complexity and manual lookups that previously slowed users down. Staff now get faster, more efficient access to information through a conversational interface instead of difficult procedures. The project demonstrates the value of applying AI frameworks like LlamaIndex to enterprise data and delivers a scalable architecture prepared for future needs, ultimately supporting operational efficiency and data-driven decision-making within the pharmaceutical distribution sector.