Harnessing OpenAI: Unlocking the Potential of Large Language Models

Introduction

With AI becoming a household phrase and a business necessity, we explore how one gravity9 client leveraged its strengths to shrug off cumbersome manual processes and enjoy increased efficiency, improved data quality, better service delivery and savings in both time and resources.

Background Context

Our client, one of the largest rental property providers in the United States, owns many properties. These form communities, governed by Homeowner Association (HOA) bodies which set local rules, responsibilities, and restrictions for property owners.

In our client’s case, there are over 15,000 HOAs to deal with, each with its own documentation. These documents can vary significantly, having:

- A differing legal style, as well as different rules and regulations.

- In some cases, later addendums which override earlier rules and regulations.

- Varying quality (photocopies contained in PDFs, or even printed by typewriters in some cases).

Scope of Impact and the Problem

To ensure customer satisfaction, drive business effectively and keep costs and resources managed efficiently, our client must have accurate knowledge of HOA regulations for any given property.

This is especially important during:

- Lease Contract Negotiations. For example, a customer might wish to know if pets are allowed, and how many. They might wish to know if their trailer can be parked within the community.

- Revenue Enhancement. The property owner needs to understand if commercial signs can be erected, advertising that the property is available to rent (and if there are any restrictions around this).

- Property Maintenance. Are any permits required from the HOA when maintenance is being performed?

- Property Ownership. A property renter (resident) might perform an action that violates HOA regulations, resulting in a fine to the property owner. Such fines can be contested in some cases, but if they are only reported to the property owner via slow postal mail, less time is left to contest them.

Resident Managers, Leasing Agents, District Maintenance Managers and Legal Teams are involved at different stages of the property lifecycle. They must all be aware of the HOA regulations governing the properties they are involved with.

Traditionally, specific HOA documentation would need to be searched manually (taking into account the above differences in format, legalities etc.) and relevant regulations identified “by hand”, with multiple inquiries per day. This left scope for delays and inaccuracies which could lead to unacceptable risk and cost (e.g. fines from said HOA for any breach of regulations).

Our Technical Solution

Our client sought to expand on the back of ongoing success, but in a way that keeps the business operating safely and efficiently. Recent advances in AI are well suited to this, in the form of Large Language Models (LLMs) such as OpenAI GPT, Meta Llama, Google Gemini, Amazon Titan, Anthropic Claude and xAI Grok.

These LLMs are extremely good at working with, and understanding, written text. This makes them an ideal technology for searching documentation, extracting regulations and applying reasoning to them, providing our client’s employees with the information they need in the correct context.

Our approach to a solution was made up of several components:

- Large Language Model (LLM)

We opted for one of the best-known and most mature LLMs: OpenAI’s GPT-3.5 Turbo, the model behind ChatGPT. As a Microsoft Partner implementing the solution on our client’s Azure technology stack, we used Azure OpenAI hosted models. Together these gave us a robust, fast and cost-effective solution.

- Retrieval Augmented Generation (RAG)

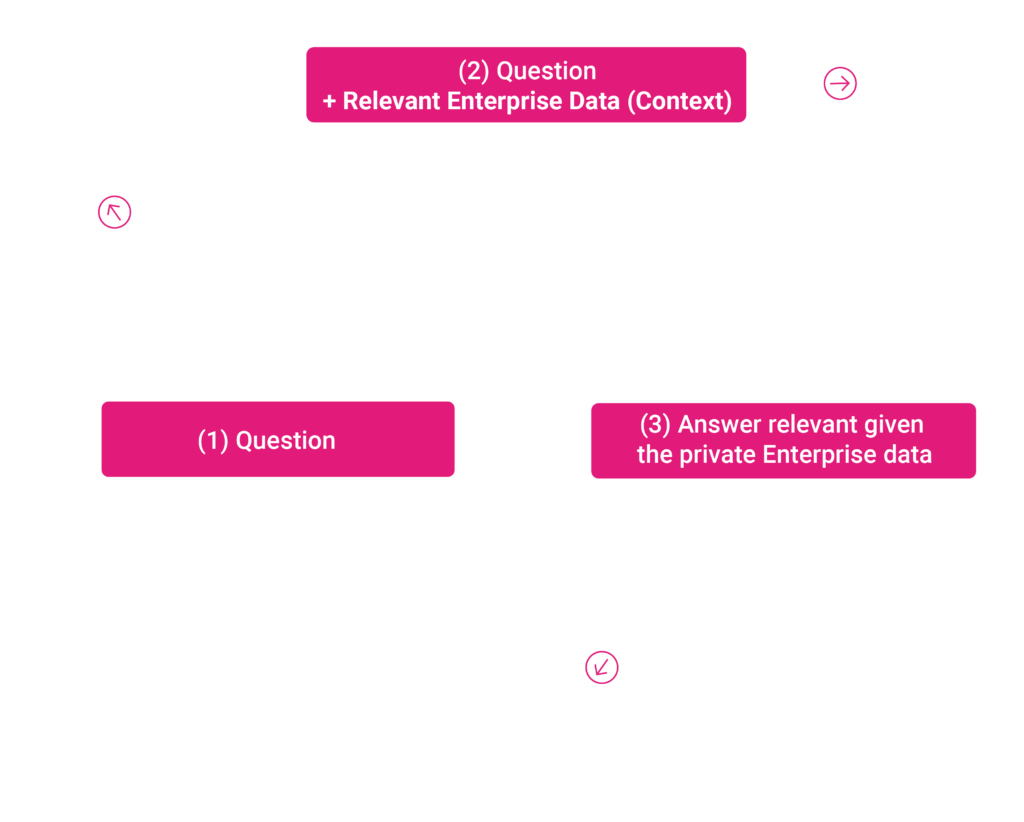

While LLMs are great at working with text, they know little about proprietary enterprise data, because they are trained largely on public data (available on the internet or in libraries). Their knowledge also stops at a fixed cutoff date; for GPT-3.5 Turbo, this is September 2021.

So how do we make privately owned information available to the LLM knowledge base while keeping it private (not public)?

The answer is Retrieval Augmented Generation (RAG). This technique retrieves relevant contextual information and feeds it to the LLM alongside the prompt, so that the generated text response (GenAI) is grounded in that context. While the idea is simple in principle, the quality of the prompt and of the contextual information fed into the LLM is key to achieving superior results. When the context provided is irrelevant or of poor quality, response accuracy drops and the model may start to hallucinate and offer up false information.
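To make the idea concrete, here is a minimal sketch of how retrieved passages might be combined with a question before being sent to a chat model. It is an illustration only, not the code from the client project: the function name, the prompt wording and the sample passages are all assumptions.

```python
from typing import Dict, List


def build_rag_messages(question: str, passages: List[str]) -> List[Dict[str, str]]:
    """Assemble chat messages that ground the model in retrieved passages."""
    # Number each passage so an answer can be traced back to its source excerpt.
    context = "\n\n".join(
        f"[{i}] {text}" for i, text in enumerate(passages, start=1)
    )
    # Hypothetical instructions: restrict the model to the supplied excerpts
    # and give it an explicit way out when the answer is not there.
    system = (
        "You answer questions about Homeowner Association (HOA) rules. "
        "Use only the numbered excerpts provided. "
        "If the excerpts do not contain the answer, say that you do not know."
    )
    user = f"Excerpts:\n{context}\n\nQuestion: {question}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


if __name__ == "__main__":
    # Made-up passages standing in for the results of a vector search.
    messages = build_rag_messages(
        "How many pets are allowed?",
        [
            "Residents may keep up to two domestic pets.",
            "Trailers may not be parked overnight.",
        ],
    )
    for message in messages:
        print(message["role"], "->", message["content"])
```

The resulting list follows the role/content message shape used by chat completion APIs. Telling the model to admit when the excerpts do not contain the answer is one common way to discourage hallucination, though it does not remove the need to retrieve good context in the first place.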

To streamline the development of such a context-aware system and make prompt engineering more efficient, we used LangChain. LangChain is an open-source framework for building applications based on LLMs (which can include chatbots, question-answering, content generation, summarization and so on).

Another reason we chose Azure OpenAI is Microsoft’s robust approach to data privacy. Prompts and information sent to the Azure OpenAI service are not stored by Microsoft for training purposes, nor shared with third parties. This assured our client that their confidential data would remain private and not be made available publicly or to competitors.

- Text Embedding and Vector Search

Deep Neural Networks (DNNs) operate on vectors as inputs and perform matrix calculations during inference. Embedding models are algorithms trained to encode information as dense vector representations in a multi-dimensional space.

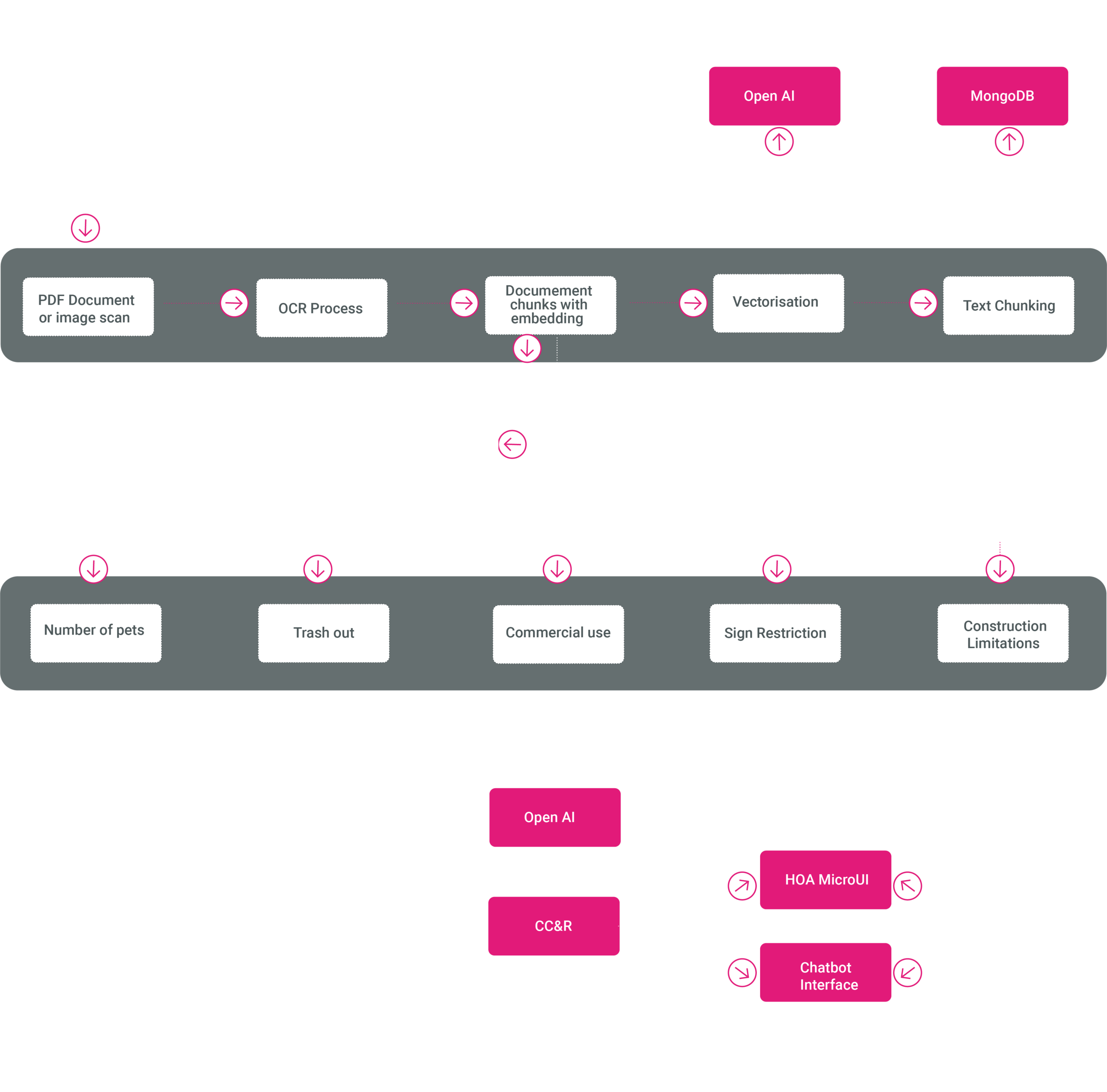

In our case we used the “text-embedding-ada-002” embedding model from Azure OpenAI.

Embedding both the text (from the private documents/data) and the question in the prompt lets us calculate a distance between the resulting vectors, which tells us how closely related the two pieces of text are. Because the comparison captures semantic meaning and synonyms rather than exact wording, finding related fragments of text works nicely with databases that support Vector Search.
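As a small worked example of "distance between vectors", the sketch below computes cosine similarity, one commonly used measure (the article does not state which metric the project used, so treat the choice as an assumption). The three-dimensional vectors are toy values for readability; real text-embedding-ada-002 vectors have 1,536 dimensions.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    # Toy vectors, invented for illustration; not real embedding output.
    question = [0.9, 0.1, 0.0]      # "How many pets can I keep?"
    pets_rule = [0.8, 0.2, 0.1]     # a paragraph about pets
    parking_rule = [0.1, 0.9, 0.2]  # a paragraph about parking

    print("pets:", cosine_similarity(question, pets_rule))
    print("parking:", cosine_similarity(question, parking_rule))
```

With these toy values the pets paragraph scores higher than the parking paragraph, so it would be ranked first when choosing context for the prompt.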

Paragraphs of text from the HOA documents were stored in the vector database, along with their embeddings. This is the indexing process, in which the loaded content is analysed and converted into a suitable format for fast retrieval. The embedded prompt can then be used to run vector searches against this index, enabling the RAG technique described above.
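The indexing step can be sketched roughly as follows. This is a simplified illustration under stated assumptions: the record fields (`hoa_id`, `position`, `text`, `embedding`) are hypothetical, splitting on blank lines is only one possible chunking strategy, and `fake_embed` is a stand-in so the example runs offline. A real pipeline would call the deployed embedding model instead.

```python
from typing import Callable, Dict, List

# Any function that turns a piece of text into a vector.
Embedder = Callable[[str], List[float]]


def split_paragraphs(text: str) -> List[str]:
    """Split extracted document text into non-empty paragraphs on blank lines."""
    return [part.strip() for part in text.split("\n\n") if part.strip()]


def build_index_records(hoa_id: str, text: str, embed: Embedder) -> List[Dict[str, object]]:
    """Create one record per paragraph, each stored next to its embedding."""
    records: List[Dict[str, object]] = []
    for position, paragraph in enumerate(split_paragraphs(text)):
        records.append(
            {
                "hoa_id": hoa_id,        # hypothetical identifier for the HOA
                "position": position,    # order of the paragraph in the document
                "text": paragraph,
                "embedding": embed(paragraph),
            }
        )
    return records


def fake_embed(text: str) -> List[float]:
    """Placeholder only: NOT a real embedding, just enough to run the sketch."""
    return [float(len(text)), float(text.count(" "))]


if __name__ == "__main__":
    sample = "Pets are limited to two per home.\n\nSigns require written approval."
    for record in build_index_records("hoa-001", sample, fake_embed):
        print(record)
```

Passing the embedder in as a parameter keeps the chunking logic testable without network access and makes it straightforward to swap in a different embedding model later.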

Many databases now support Vector Search (MongoDB Atlas, Azure Cosmos DB, Azure AI Search etc.). We selected MongoDB Atlas as it has proven to be reliable and scalable, and it works very well for AI workloads such as this project.
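For readers unfamiliar with Atlas Vector Search, a query is expressed as an aggregation pipeline whose first stage is `$vectorSearch`. The sketch below only builds that pipeline as plain Python data; the index name, field names and default limits are assumptions for illustration, not details taken from the project.

```python
from typing import Any, Dict, List, Optional, Sequence


def vector_search_pipeline(
    query_vector: Sequence[float],
    index_name: str = "hoa_vector_index",  # hypothetical index name
    path: str = "embedding",               # hypothetical field holding the vectors
    limit: int = 5,
    num_candidates: int = 100,
    hoa_id: Optional[str] = None,
) -> List[Dict[str, Any]]:
    """Build an aggregation pipeline for an Atlas Vector Search query."""
    if num_candidates < limit:
        raise ValueError("num_candidates must be at least as large as limit")
    stage: Dict[str, Any] = {
        "index": index_name,
        "path": path,
        "queryVector": list(query_vector),
        "numCandidates": num_candidates,
        "limit": limit,
    }
    if hoa_id is not None:
        # Restrict results to a single HOA. The field must be declared as a
        # filter field in the vector index definition for this to work.
        stage["filter"] = {"hoa_id": {"$eq": hoa_id}}
    return [
        {"$vectorSearch": stage},
        # Return the paragraph text together with its similarity score.
        {"$project": {"_id": 0, "text": 1, "score": {"$meta": "vectorSearchScore"}}},
    ]


if __name__ == "__main__":
    pipeline = vector_search_pipeline([0.1, 0.2, 0.3], hoa_id="hoa-001")
    print(pipeline)
```

With a driver such as PyMongo, a pipeline like this would be passed to `collection.aggregate(pipeline)`, and the returned paragraphs would become the context for the RAG prompt.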

In the case of the HOA documentation our client is concerned with, changes are rare, so text extraction and vectorisation only needed to be done in full once, with subsequent small updates where necessary. This keeps the resulting solution efficient and cost-effective, with low resource overheads.
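One way to keep those later updates small is to fingerprint each paragraph and re-embed only the paragraphs whose fingerprint has not been seen before. The article does not describe how updates were detected, so the approach and the names below are assumptions offered as a sketch.

```python
import hashlib
from typing import Iterable, List, Set


def fingerprint(text: str) -> str:
    """Hash a paragraph after collapsing whitespace, so reflowed text still matches."""
    normalised = " ".join(text.split())
    return hashlib.sha256(normalised.encode("utf-8")).hexdigest()


def paragraphs_to_embed(paragraphs: Iterable[str], known: Set[str]) -> List[str]:
    """Return only the paragraphs whose fingerprint is not already indexed."""
    return [p for p in paragraphs if fingerprint(p) not in known]


if __name__ == "__main__":
    # Fingerprints stored during the initial full indexing run (made-up data).
    already_indexed = {fingerprint("Pets are limited to two per home.")}

    updated_document = [
        "Pets are  limited to two per home.",     # unchanged apart from spacing
        "Signs require written approval.",        # new paragraph from an addendum
    ]
    print(paragraphs_to_embed(updated_document, already_indexed))
```

Since embedding calls are the part of indexing that costs money and time, skipping unchanged paragraphs keeps the cost of an update proportional to the size of the change.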

- Prompt Engineering

Prompt Engineering is a set of patterns and recommendations for interacting with LLMs (prompting them), formulated to increase the accuracy and performance of the LLM response for a given use case. Think of them as the equivalent of design patterns in any other software engineering context.

With contextual information available from the private documents in the form of a vector database, along with the selected LLM and embedding model, we implemented the solution. We created several iterations to increase performance and ensure no hallucinations in the generated text response.

In this first phase, our primary goal was to extract relevant regulations and rules from HOA documentation and break them down into the categories which might be needed (landscaping, construction, maintenance, restrictions around pets, parking, signage etc.). On top of this we ensured summaries were provided, making the queried information concise and appealing to end users.
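A category-based extraction of this kind is often implemented by asking the model for structured output and validating what comes back. The sketch below is an assumption about how that could look, not the prompts used in the project: the category keys mirror the list above, while the prompt wording, the function names and the sample response are invented.

```python
import json
from typing import Dict, List

# Category keys taken from the list in the text; the exact keys are an assumption.
CATEGORIES = ["landscaping", "construction", "maintenance", "pets", "parking", "signage"]


def build_extraction_prompt(excerpts: str) -> str:
    """Ask the model for rules grouped by category, as a JSON object."""
    return (
        "Extract the HOA rules from the excerpts below. "
        "Reply with a JSON object whose keys are: "
        + ", ".join(CATEGORIES)
        + ". Each value must be a list of short rule summaries. "
        "Use an empty list when the excerpts say nothing about a category.\n\n"
        f"Excerpts:\n{excerpts}"
    )


def parse_extraction(raw: str) -> Dict[str, List[str]]:
    """Validate the model's reply and return rules keyed by known category."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object keyed by category")
    result: Dict[str, List[str]] = {category: [] for category in CATEGORIES}
    for category, rules in data.items():
        if category not in result:
            continue  # ignore categories that were not asked for
        if not isinstance(rules, list):
            raise ValueError(f"rules for {category!r} must be a list")
        result[category] = [str(rule) for rule in rules]
    return result


if __name__ == "__main__":
    # Invented reply standing in for a real model response.
    reply = '{"pets": ["Maximum of two pets per home"], "signage": []}'
    print(parse_extraction(reply))
```

Validating the reply rather than trusting it means a malformed or off-topic response fails loudly instead of reaching end users.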

- Other (UI and Additional Integrations)

An improved user interface was also developed, to be used by Resident Managers and Leasing Agents. We used a micro-frontend and microservices architecture, meaning this new functionality could be implemented independently without disturbing development teams working on other areas of the product.

Since many source documents were low-resolution scans of printed information, we used Azure AI Document Intelligence to extract the text, giving us accurate, useful data to work from.

The overall architecture gravity9 delivered can be viewed below:

This solution leaves scope for additional development. The next phase will implement a chatbot-style experience (for example, a Resident Manager can ask the AI questions about the HOA documents) based around Chain of Thought or Prompt Chaining.
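Prompt Chaining simply means feeding the output of one prompt into the next. Since this phase is still to come, the following is purely a hypothetical sketch: the two-step split, the prompt wording and the `llm` callable (any function that takes a prompt and returns text) are assumptions.

```python
from typing import Callable

# Any function that sends a prompt to a model and returns its text reply.
LLM = Callable[[str], str]


def answer_with_chain(question: str, excerpts: str, llm: LLM) -> str:
    """Answer a question in two chained steps: select rules, then summarise."""
    # Step 1: narrow the excerpts down to the rules relevant to the question.
    relevant_rules = llm(
        "List the rules in these excerpts that relate to the question.\n\n"
        f"Excerpts:\n{excerpts}\n\nQuestion: {question}"
    )
    # Step 2: the output of step 1 becomes the input to the final prompt.
    return llm(
        "Using only these rules, answer the question in two sentences or fewer.\n\n"
        f"Rules:\n{relevant_rules}\n\nQuestion: {question}"
    )


if __name__ == "__main__":
    def echo_llm(prompt: str) -> str:
        """Stand-in model that reports the prompt size, so the sketch runs offline."""
        return f"(model reply to a {len(prompt)}-character prompt)"

    print(answer_with_chain("Are pets allowed?", "Two pets per home.", echo_llm))
```

Splitting the work this way keeps each prompt focused on one task, at the cost of an extra model call per question.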

Conclusion

Thanks to AI technologies like LLMs and RAG, organisations can enjoy huge boosts in efficiency, accessibility, cost effectiveness, quality of service, and security. What was once only available to a handful of elite AI researchers is finally becoming universally available and, in many cases, businesses simply cannot afford to ignore it.

As an experienced modernisation consultancy, gravity9 is perfectly positioned to help your organisation leverage AI to ensure your business remains not just competitive, but ahead of the curve. Get in touch and we would be happy to discuss your needs.